XML sitemaps serve a very niche purpose in search engine optimization: facilitating indexation. Posting an XML sitemap is kind of like rolling out the red carpet for search engines and giving them a roadmap of the preferred routes through the site. It’s the site owner’s chance to tell crawlers, “I’d really appreciate it if you’d focus on these URLs in particular.” Whether the engines accept those recommendations of which URLs to crawl depends on the signals the site is sending.

What Are XML Sitemaps?

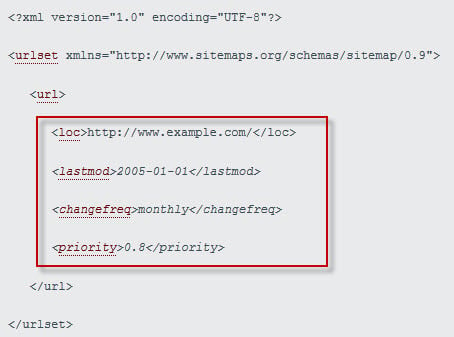

Simply put, an XML sitemap is a bit of Extensible Markup Language (XML), a standard machine-readable format consumable by search engines and other data-munching programs like feed readers. XML sitemaps convey information about one thing: the URLs that make up a site. Each XML sitemap file follows the same basic form. A one-page site located at www.example.com would have the following XML sitemap:

Sample XML sitemap file

The XML version and urlset are the same for every XML sitemap file. For each URL listed, a <url> tag and a <loc> tag are required, with optional <lastmod>, <changefreq> and <priority> tags. The URL information, outlined in red above, indicates the information that changes for each URL. The <loc> tag simply contains the absolute URL or locator for a page. The <lastmod> tag specifies the file’s last modification date. The <changefreq> tag indicates the frequency with which a file is changed. The <priority> tag indicates the file’s importance within the site. Avoid the temptation to set every URL to daily frequency and maximum priority. No multi-page site is structured and maintained this way, so search engines will be more inclined to ignore the whole XML sitemap if the frequency and priority tags do not reflect reality.
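As a rough illustration of the structure described above, the following Python sketch builds the sitemap for a one-page site using the standard library's `xml.etree.ElementTree`. The function name `build_sitemap`, the dictionary layout, and the sample values for lastmod, changefreq and priority are assumptions made for this example, not part of the sitemap format itself.

```python
import xml.etree.ElementTree as ET

# Namespace used by the urlset element of every XML sitemap file.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML as a string.

    Each entry is a dict with a required "loc" key and optional
    "lastmod", "changefreq" and "priority" keys (hypothetical layout).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Only emit the optional tags that were actually supplied.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Sample values below are illustrative only.
sitemap_xml = build_sitemap([
    {
        "loc": "http://www.example.com/",
        "lastmod": "2024-01-15",
        "changefreq": "monthly",
        "priority": 0.8,
    },
])
print(sitemap_xml)
```

Adding more pages is a matter of appending more dictionaries to the list, each with values that reflect how that page is really maintained.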

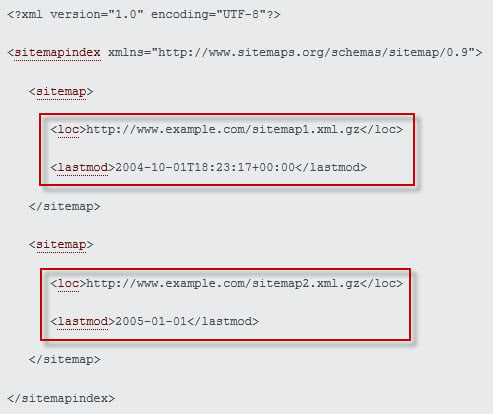

The URLs in an XML sitemap can be on the same domain or, where ownership of the other hosts has been proven to the search engines, on different subdomains and domains. However, each XML file can contain no more than 50,000 URLs and is limited to 50MB in size, uncompressed. To conserve bandwidth, XML sitemaps can be compressed using gzip. When a site contains more than 50,000 URLs or its sitemap reaches the size limit, multiple XML sitemaps need to be generated and called together from an XML sitemap index file. In the same way an XML sitemap lists URLs in a site, the XML sitemap index lists XML sitemaps for a site. The areas to modify for each XML sitemap listed are outlined below:

Sample XML sitemap index file
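The splitting described above can be sketched in Python as follows. This is a minimal example under stated assumptions: the product URLs, the `sitemap-N.xml.gz` naming scheme and the helper names are hypothetical, and the sketch enforces only the 50,000-URL limit, not the file-size limit.

```python
import gzip
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XML_DECLARATION = b'<?xml version="1.0" encoding="UTF-8"?>\n'
MAX_URLS_PER_FILE = 50_000  # per-file URL limit


def chunk_urls(urls, size=MAX_URLS_PER_FILE):
    """Split a list of URLs into consecutive groups of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_urlset(urls):
    """Serialize one group of URLs as sitemap XML bytes."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = loc
    return XML_DECLARATION + ET.tostring(urlset, encoding="utf-8")


def build_index(sitemap_locs):
    """Serialize a sitemap index that lists each child sitemap's URL."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locs:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    return XML_DECLARATION + ET.tostring(index, encoding="utf-8")


# Hypothetical site with 120,000 product URLs.
urls = [f"http://www.example.com/product-{n}" for n in range(120_000)]

files = {}
for number, group in enumerate(chunk_urls(urls), start=1):
    name = f"sitemap-{number}.xml.gz"  # hypothetical naming scheme
    files[name] = gzip.compress(build_urlset(group))

index_xml = build_index(f"http://www.example.com/{name}" for name in files)
print(len(files), "sitemap files")
```

A production version would also check the size of each uncompressed file and split further when a group of URLs exceeds the size limit.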

For more examples of XML sitemaps, peruse any site and enter sitemap.xml after the domain. For example, https://www.practicalecommerce.com/sitemap.xml is the XML sitemap index for this site. If adding sitemap.xml doesn’t work, the XML sitemap may be named differently. Try checking the robots.txt file to see if the XML sitemap address is there. For example, check out http://www.dell.com/robots.txt for a huge list of XML sitemaps.

What to Exclude

Because XML sitemaps serve as a set of recommended links to crawl, any noncanonical URLs should be excluded from the XML sitemap. Any URLs that have been disallowed in the robots.txt file, such as secure ecommerce pages, duplicate content, and print and email versions of pages, should also be left out. Likewise, any pages that carry a meta robots noindex tag, or a canonical tag pointing to a different URL, should not be included in the XML sitemap. If the crawlers find URLs in the XML sitemap that have been purposely excluded by one of these means, it sends a mixed signal: “Don’t crawl or index this URL. But do consider it more important than the other URLs on my site.” The crawlers will obey the exclusion commands issued by robots.txt disallows and meta robots noindex. But if enough of these mixed signals are present, the XML sitemap may be discredited and lose its recommending ability.
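One way to avoid the robots.txt part of this mixed signal is to filter candidate URLs against the site's own disallow rules before writing the sitemap. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt rules, the candidate URLs and the helper name `allowed_urls` are hypothetical. It does not detect noindex or canonical tags, which would require fetching and inspecting each page.

```python
from urllib.robotparser import RobotFileParser


def allowed_urls(urls, robots_txt, user_agent="*"):
    """Keep only the URLs that the given robots.txt text allows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks checkout and print pages.
robots_txt = """\
User-agent: *
Disallow: /checkout/
Disallow: /print/
"""

# Hypothetical candidate URLs for the sitemap.
candidates = [
    "http://www.example.com/",
    "http://www.example.com/products/widget",
    "http://www.example.com/checkout/cart",
    "http://www.example.com/print/widget",
]

sitemap_urls = allowed_urls(candidates, robots_txt)
print(sitemap_urls)
```

Only the home page and the product page survive the filter, so the disallowed checkout and print URLs never reach the sitemap.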

How to Create XML Sitemaps

In the simplest cases, small sites can easily create and post their own XML sitemaps manually using the examples above as formatting guides. For example, a very small ecommerce site might consistently offer the same five products for six months. The URLs for the site don’t change, even though the owners may update the content every month or so to keep it feeling a bit fresh. The owners of this five-product site could easily create a text file in Notepad with the format of an XML sitemap and save that file as sitemap.xml. All that remains is to post sitemap.xml to the root of the site, and the XML sitemap is live. In six months, when the products change, they would simply update the sitemap.xml file and repost it to the root.

For larger sites and sites that change more frequently, plugins or modules available for many ecommerce platforms can automate the creation and posting of XML sitemaps. Sites built on Drupal or WordPress can use all-in-one XML sitemap plugins like Drupal’s XML Sitemap Module or Better WordPress Google XML Sitemaps to generate and post their files on a regularly scheduled basis. If a site’s platform doesn’t include support for automating XML sitemaps, freeware programs like gSite Crawler can automate the creation and posting of XML sitemaps via FTP. Look for an XML sitemap program that obeys robots exclusion protocols like robots.txt disallows and meta robots noindex tags to ensure that excluded files do not end up in the XML sitemap.

For more detailed information on XML sitemaps, see Sitemaps.org.

Promoting XML Sitemaps

XML sitemaps require promotion. Fortunately, their intended audience is so small that a couple of quick steps are all it takes. First, make the XML sitemap autodiscoverable by adding the following line anywhere in the site’s robots.txt file:

Sitemap: http://www.example.com/sitemap-index.xml

When reputable crawlers visit a site they make the robots.txt their first stop to identify which files should be crawled and which should be avoided. In the process the crawler identifies the XML sitemap from the autodiscovery line and makes that XML file its second stop. From there it proceeds with the rest of its crawl, armed with the site’s recommended files.
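To make the autodiscovery step concrete, here is a minimal Python sketch of how a crawler might pull sitemap addresses out of a robots.txt file. It is an illustration only, not how any particular search engine implements it; the function name `find_sitemaps` and the sample robots.txt content are assumptions.

```python
def find_sitemaps(robots_txt):
    """Return the URLs declared on Sitemap: lines of a robots.txt body."""
    sitemaps = []
    for line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        field, separator, value = line.partition(":")
        # The field name is matched case-insensitively.
        if separator and field.strip().lower() == "sitemap":
            sitemaps.append(value.strip())
    return sitemaps


# Hypothetical robots.txt containing one autodiscovery line.
robots_txt = """\
User-agent: *
Disallow: /checkout/

Sitemap: http://www.example.com/sitemap-index.xml
"""

print(find_sitemaps(robots_txt))
# prints ['http://www.example.com/sitemap-index.xml']
```

Because the line can appear anywhere in the file, the sketch scans every line rather than looking only inside a particular user-agent group.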

The second step in promoting an XML sitemap is submitting it to Google’s and Bing’s webmaster tools sites. The autodiscovery line will ensure that Googlebot and Bingbot find the XML sitemap on their next visit, which could be a month from now depending on the site’s crawl frequency. Submitting the XML sitemap directly to their tools sites prompts them to crawl the sitemap more quickly, usually within the next few hours. The engines’ tools sites also provide data on the URLs in the XML sitemap, such as how many are indexed and whether the XML sitemap itself is valid.

XML Sitemap Myths

Because they’re a little obscure, XML sitemaps have collected an interesting set of mythical powers and superstitions. These are some of my favorite questions and objections regarding XML sitemaps.

- “Including a URL in the XML sitemap guarantees it will be indexed.”

  No. It’s important to note that XML sitemaps are only recommendations. The XML sitemap will not guarantee indexation of the URLs included.

- “If I leave a URL out of the XML sitemap it will get deindexed.”

  No. The XML sitemap will not exclude indexation of URLs not included on the XML sitemap. It’s merely a set of recommended URLs that, if the recommendations agree with the signals the rest of the site is sending, will lend a bit of extra importance to the URLs included above and beyond the other URLs on the site.

- “XML sitemaps are difficult to create and maintain.”

  No. In the simplest cases, small sites can easily create and post their own XML sitemaps manually using the examples above as formatting guides. For larger sites and sites that change more frequently, plugins or modules available for most ecommerce platforms can automate the creation and posting of XML sitemaps.

- “Posting an XML sitemap is like asking to get scraped and spammed.”

  No. An XML sitemap is nothing more than a list of URLs. Scrapers and spammers can easily crawl any public site they wish to generate a list of URLs and content from which to steal a site’s content for their own nefarious purposes. They certainly don’t need an XML sitemap to do it, and not posting an XML sitemap won’t keep the scrapers and spammers away.