I’m often asked to help reverse drops in organic search traffic. These drops usually follow major site changes, such as redesigns and ecommerce platform migrations. Traffic drops can be frustrating. You need to get to the root of the problem quickly to reverse declines in revenue.

For large traffic drops, I used to run a website through a full search engine optimization audit. The idea was to fix all the issues identified in the audit and hope traffic would return to normal. But that approach, I now believe, is not enough to uncover difficult issues. In “SEO: How to Quickly Reverse a Traffic Downtrend,” I explained an alternative approach to get to the root of traffic problems quickly.

But I’ve since found a simpler and faster approach, with good success.

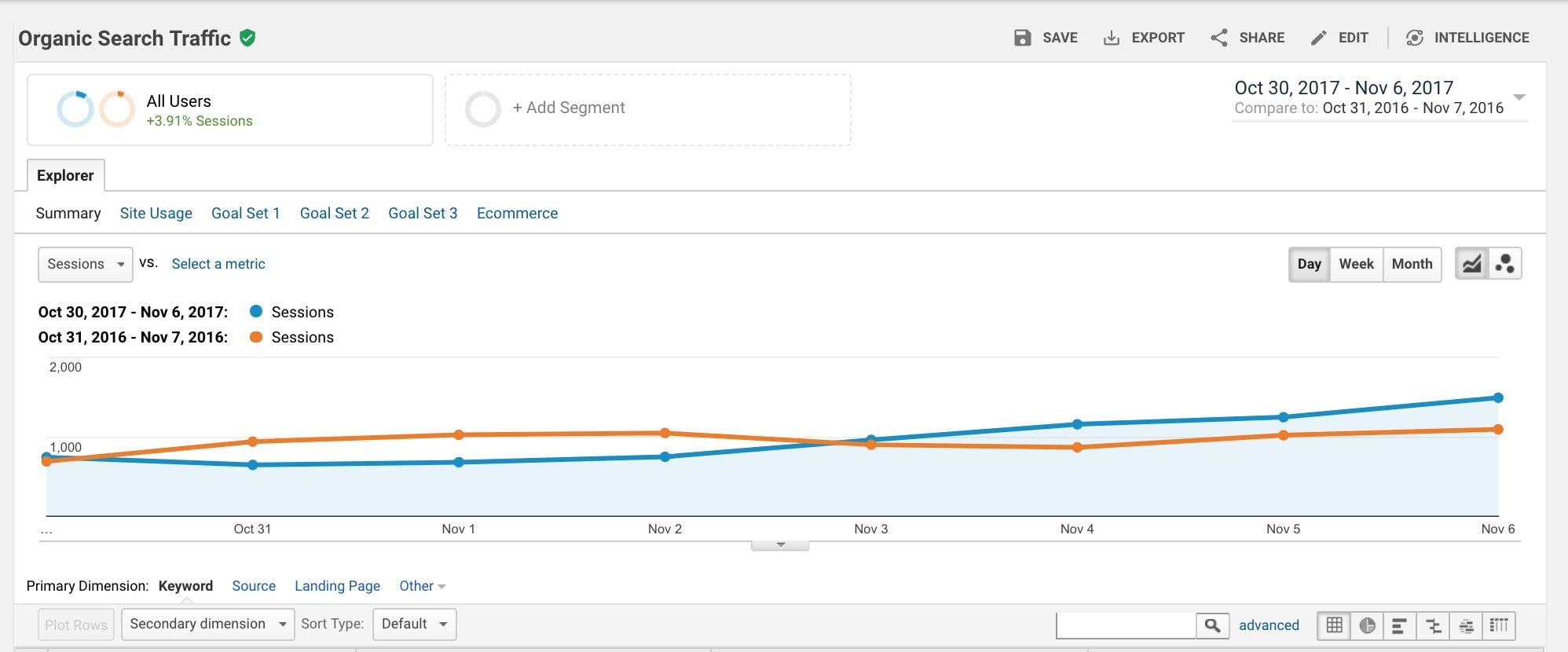

The idea is that instead of checking the entire site for SEO problems, we check only the pages that lost traffic during the period of the drop. In the example below, the drop occurred from October 31 to November 2, 2017, immediately after the company migrated its website to full HTTPS.

October 31, 2017, was a Tuesday, so in the Google Analytics graph below I compare it with November 1, 2016, which was also a Tuesday. This client is a retailer with a highly seasonal business, so year-over-year comparisons that line up the same weekdays are the best way to analyze its traffic fluctuations.
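
To line up weekdays without checking a calendar, you can subtract exactly 52 weeks (364 days) from the current date. Here is a minimal sketch; the function name `sameWeekdayLastYear` is my own placeholder and not part of Google Analytics.

```javascript
// Hypothetical helper: returns the date 52 weeks (364 days) before the given
// YYYY-MM-DD date. Subtracting whole weeks always lands on the same weekday.
function sameWeekdayLastYear(isoDate) {
  const [year, month, day] = isoDate.split('-').map(Number);
  // Work in UTC so daylight-saving shifts cannot move the result by a day.
  const date = new Date(Date.UTC(year, month - 1, day));
  date.setUTCDate(date.getUTCDate() - 364);
  return date.toISOString().slice(0, 10);
}

console.log(sameWeekdayLastYear('2017-10-31')); // 2016-11-01
```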

Traffic went down for a bit during reindexing, and then back up.

The client’s overall traffic was down for three days during the reindexing from HTTP to HTTPS. Then it went up, and increased above previous traffic levels. But still, certain pages lost traffic, so we can narrow our investigation to just those pages.

Organic Pages Losing Traffic

To narrow the issue, we will identify pages that lost traffic. We will use the Google Sheets add-on that I introduced in my previous article to pull the relevant data we need from Google Analytics.

Step 1. Pull organic search landing pages from Google Analytics for the previous time period, which is 2016 in this case.

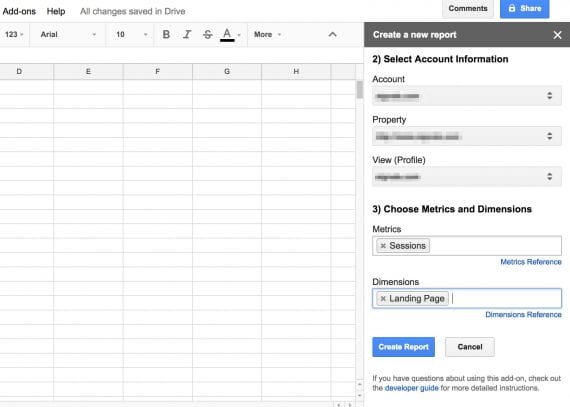

Create a blank Google Sheet, then go to Add-ons > Get add-ons > Google Analytics. After you complete the authorization step, you will see a pop-up, as follows.

Note that we need only the Sessions metric and the Landing Page dimension. Click on “Create Report.”

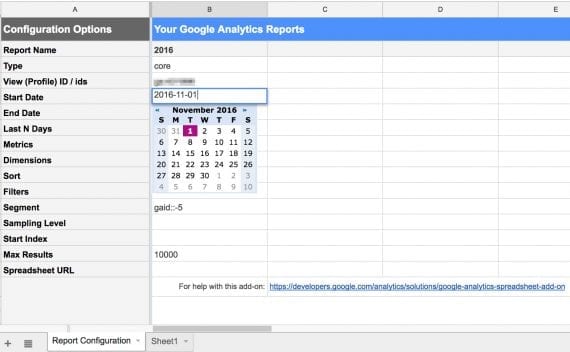

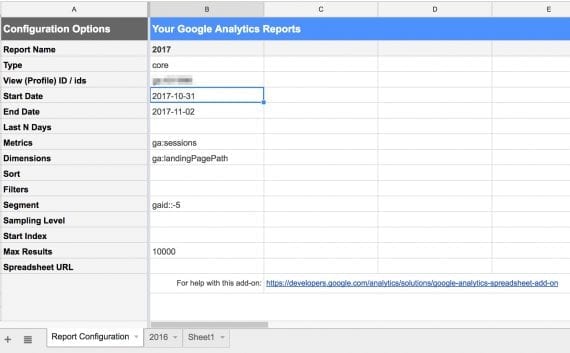

Step 2. Prepare the report that will fetch the 2016 data.

Enter the date using the YYYY-MM-DD format.

I named the report “2016” under the report configuration tab, and entered the dates using the format YYYY-MM-DD, where YYYY is the year using four digits, MM is the month using two digits, and DD is the day using two digits. Enter any date, and double-click on the cell to get a nice calendar like you see in the picture. Remember to include the organic search segment, which is identified above by “gaid::-5”, and also set the “Max Results” to 10,000. You can use the “Start Index” field to iterate over sets of pages greater than 10,000.
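
If your site has more than 10,000 landing pages, you will need several runs, each with a different “Start Index.” The sketch below lists those values. It assumes the start index is 1-based, as in the Google Analytics Core Reporting API, and `startIndexes` is a hypothetical helper name.

```javascript
// Hypothetical helper: lists the "Start Index" values needed to page through
// totalRows results when each report run returns at most maxResults rows.
// Assumes a 1-based start index.
function startIndexes(totalRows, maxResults = 10000) {
  const indexes = [];
  for (let start = 1; start <= totalRows; start += maxResults) {
    indexes.push(start);
  }
  return indexes;
}

// A site with 25,000 landing pages needs three runs.
console.log(startIndexes(25000).join(', ')); // 1, 10001, 20001
```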

Then go to Add-ons > Google Analytics > Run reports to get the pages for the 2016 date range. You will get a new sheet tab named “2016” containing the report.

Step 3. Update the report configuration tab to fetch the 2017 data.

Note that we only need to change the report name (to “2017”) and alter the dates. Then go to Add-ons > Google Analytics > Run reports again to get the 2017 landing pages.

Step 4 (optional). Sometimes the URLs won’t match in a landing page comparison because of a site redesign or replatform.

A simple solution for this is to spider the pages in the previous dataset, follow the redirects (assuming there are URL mappings in place), and use the final landing pages as the pages we need to compare. Again, this step is necessary only where you have URL changes between the comparison date ranges.
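
A spider does this over HTTP, but the logic is easy to see with a known URL mapping. The following is a minimal sketch, assuming you have the old-to-new mappings in a `Map`. The function name, the `maxHops` limit, and the example URLs are all made up for illustration.

```javascript
// Hypothetical sketch: follows a redirect map (old URL -> new URL) until it
// reaches a URL that no longer redirects. Returns null when it detects a loop
// or exceeds maxHops, so those URLs can be reviewed by hand.
function resolveFinalUrl(url, redirects, maxHops = 10) {
  const seen = new Set();
  let current = url;
  while (redirects.has(current)) {
    if (seen.has(current) || seen.size >= maxHops) return null;
    seen.add(current);
    current = redirects.get(current);
  }
  return current;
}

// Example data is invented for illustration.
const exampleRedirects = new Map([
  ['http://www.example.com/shoes', 'https://www.example.com/shoes'],
  ['https://www.example.com/shoes', 'https://www.example.com/footwear/'],
]);
console.log(resolveFinalUrl('http://www.example.com/shoes', exampleRedirects));
// https://www.example.com/footwear/
```

The final URL, not the original one, is what you compare against the newer dataset.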

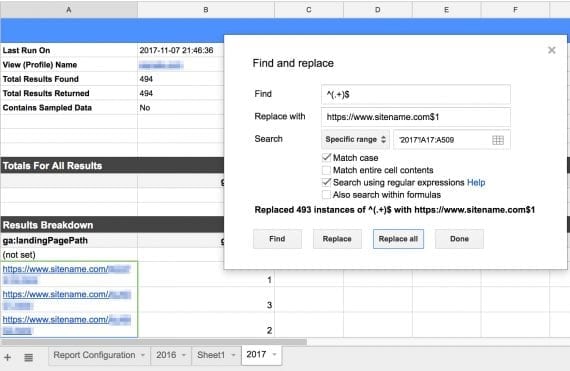

Under ga:landingPagePath, in the “Results Breakdown” section of the report, are all the pages, but they are relative. Convert them to absolute by adding your full website name.
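
The conversion is a simple string join. Here is a minimal sketch, where `toAbsoluteUrls` is a hypothetical helper and `https://www.example.com` is a placeholder for your own site.

```javascript
// Hypothetical helper: prefixes each relative landing page path with the site
// origin. Substitute your own site for the example.com placeholder.
function toAbsoluteUrls(paths, origin) {
  const base = origin.replace(/\/+$/, ''); // drop trailing slashes to avoid "//"
  return paths.map((path) => base + (path.startsWith('/') ? path : '/' + path));
}

const examplePaths = ['/', '/shoes?color=red'];
console.log(toAbsoluteUrls(examplePaths, 'https://www.example.com/').join('\n'));
// https://www.example.com/
// https://www.example.com/shoes?color=red
```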

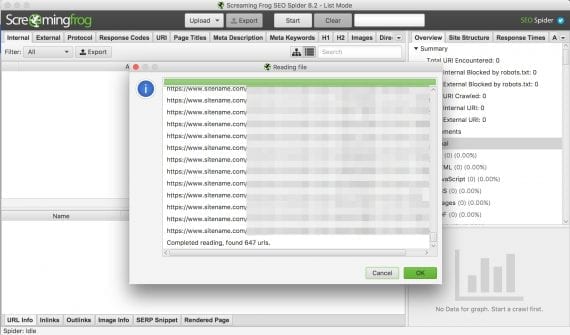

Next, select the list of full URLs, copy them to the clipboard, and paste them into an SEO spider, such as Screaming Frog.

Then export the list of final, 200 OK pages from Screaming Frog to a CSV file, and import it into another tab in the Google Sheet. Also export the pages that return 404 errors so you can address them immediately by adding 301 redirects.

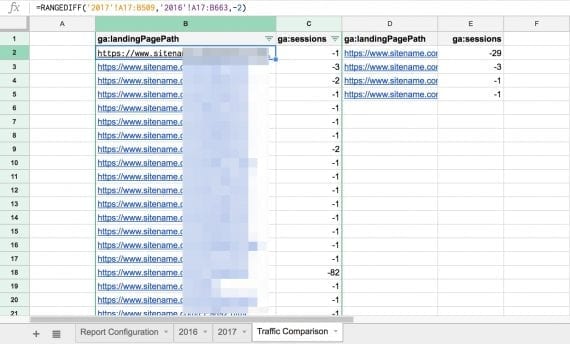

Step 5. Now that we have both sets of pages — 2016 and 2017 — we get to the fun part. We will create a custom Google Sheets function to find the pages that lost traffic.

Go to Tools > Script editor and paste this code in the script window. Then save it as RANGEDIFF. If you have other Google Sheets scripts, create a new file and save it there.

The custom script adds a new Google Sheet function called RANGEDIFF, which filters and returns the list of pages that have lost traffic, and the magnitude of the loss.

The script takes three parameters. The first is the 2017 range of full URLs with their session counts. The second is the 2016 range of full URLs, also with their session counts.

The third parameter is a flag to control the set of results we return. If the parameter is set to -2, we will get the pages that received traffic in 2016, but not 2017. If we set it to -1, we will get the pages where the traffic difference is negative. If we set it to 1, we will get the set of pages where the traffic difference is positive; and if we set it to zero, we will get pages that have no change in traffic. You can also set it to 2, to get the pages that had traffic in 2017 and none in 2016.

In the screenshot below we set the third parameter to -2. Thus the first two columns list the pages that had some traffic in 2016 and none in 2017. The second set of columns gives us the pages that had traffic in both years, but experienced a decline in 2017. We see them by setting the parameter to -1.

This technique can also be used to find the pages that gained traffic, to learn which SEO tactics are working each week or month.
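
To make the filtering logic in Step 5 concrete, here is a minimal sketch of a function that behaves as described. It is my illustration of the idea, not the original script. It assumes each range holds two columns (full URL, sessions) with no header row, and that the returned difference is current sessions minus previous sessions.

```javascript
/**
 * Sketch of a RANGEDIFF-style custom function; not the original script.
 * Each range is a 2D array of [url, sessions] rows, which is how Google
 * Sheets passes a range to a custom function.
 * flag: -2 = traffic only in previous, -1 = declined, 0 = unchanged,
 *        1 = increased, 2 = traffic only in current.
 * Returns rows of [url, difference] (current minus previous sessions).
 */
function RANGEDIFF(currentRange, previousRange, flag) {
  const toMap = (range) => {
    const map = new Map();
    for (const [url, sessions] of range) {
      if (url === '' || url == null) continue; // skip blank rows
      const key = String(url);
      map.set(key, (map.get(key) || 0) + Number(sessions));
    }
    return map;
  };
  const current = toMap(currentRange);
  const previous = toMap(previousRange);
  const rows = [];

  if (flag === 2) {
    // Pages with traffic in the current period and none in the previous one.
    for (const [url, sessions] of current) {
      if (!previous.has(url)) rows.push([url, sessions]);
    }
  } else {
    for (const [url, before] of previous) {
      const inBoth = current.has(url);
      const diff = (inBoth ? current.get(url) : 0) - before;
      // -2 keeps pages missing from the current period; -1, 0, and 1 keep
      // pages present in both periods whose difference has that sign.
      if (flag === -2 ? !inBoth : inBoth && Math.sign(diff) === flag) {
        rows.push([url, diff]);
      }
    }
  }
  // Sort ascending by difference, so the largest losses come first.
  return rows.sort((a, b) => a[1] - b[1]);
}
```

In a sheet, a call would look something like `=RANGEDIFF('2017'!A2:B, '2016'!A2:B, -1)`, where the range addresses are placeholders for wherever your full URLs and sessions sit.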

Step 6. Now that we have the list of pages that lost traffic, we can spider them following the same steps listed in Step 4, and look for specific SEO issues. For example, do they have problems such as 404s or blank pages? Are they missing important meta info, such as canonical tags? Or are they causing redirect chains and loops?
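
Once the crawl results are back, these checks are easy to automate. The sketch below flags each problem named above. The field names (`status`, `wordCount`, `canonical`, `redirectHops`, `redirectLoop`) are assumptions for illustration, so map them to whatever columns your spider exports.

```javascript
// Hypothetical sketch: flags common problems in one crawled page.
// All field names are assumed; they are not a real spider's export format.
function findIssues(page) {
  const issues = [];
  if (page.status === 404) issues.push('404 not found');
  if (page.status === 200 && page.wordCount === 0) issues.push('blank page');
  if (page.status === 200 && !page.canonical) issues.push('missing canonical tag');
  if (page.redirectHops > 1) issues.push('redirect chain');
  if (page.redirectLoop) issues.push('redirect loop');
  return issues;
}

// Example rows are invented for illustration.
const crawledPages = [
  { url: 'https://www.example.com/a', status: 200, wordCount: 0, canonical: '', redirectHops: 0 },
  { url: 'https://www.example.com/b', status: 404, wordCount: 0, canonical: '', redirectHops: 0 },
];
for (const page of crawledPages) {
  console.log(page.url + ': ' + findIssues(page).join(', '));
}
// https://www.example.com/a: blank page, missing canonical tag
// https://www.example.com/b: 404 not found
```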