This is the third installment of my “SEO 201” series, following:

“SEO 201” addresses technical, backend aspects of site architecture and organization. My 8-part “SEO 101” series explained the basics of utilizing content on a website for search engines, including keyword research and optimization.

The technical side of search engine optimization is the most critical because it determines the effectiveness of all of the other optimization you do. All the content optimization in the world won’t help a page rank better if that page is using a technology that is invisible to search engine crawlers. So we must learn to experience our sites like search engine crawlers.

Rule 2: Don’t Trust Your Experience

Enabling search engines to crawl your site is the first step. But what the crawlers find while they’re there can vary dramatically from the site as you see it.

Strong technical SEO depends on your ability to question what you see when you look at your site, because the search engines access a much-stripped-down version of the experience you and your customers have. It’s not just the images that disappear for crawlers, though. Entire sections of a page or a site can disappear from view for crawlers based on the technologies the site is using behind the scenes.

For example, when you look at Disney Store’s home page you see a whimsical and thoughtfully designed page with many visuals and navigational options. But only if you’re a human with a browser that renders JavaScript and CSS.

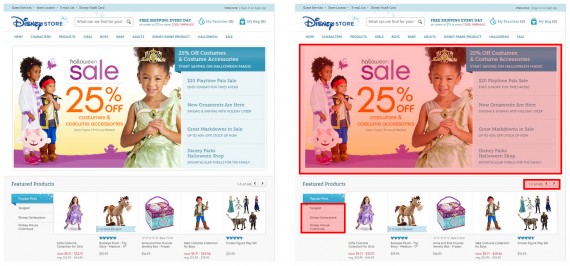

In the image below, the page on the left illustrates how you would see the page, and the red boxes on the page on the right outline the areas that aren’t visible to traditional search engine crawlers.

Disney Store’s home page as visitors see it (left) and with search-unfriendly content outlined in red (right).

The content invisible to search engines consists of a carousel of promotional links to five category and promotional landing pages, plus links to 235 featured products. In the featured products section, only the five products immediately visible are crawlable. The other 235 products in this section of the page are navigable by pagination and tab links that can’t be crawled by traditional search engine crawlers.

In Disney Store’s case, the impact of this issue is relatively low because all of the content that crawlers can’t access in these sections of the home page is crawlable and indexable through other navigational links. It would have a major SEO impact if the uncrawlable sections were the only path to the content contained in or linked to from those sections.

SEO, CSS, JavaScript, and Cookies

Disney Store’s uncrawlable promotional and navigational elements rely on JavaScript and CSS to display. I’ve worked with many major-brand ecommerce sites that run into SEO issues with their product catalog because their navigation relies on CSS and JavaScript. Once I worked with a site whose navigation relied on cookies to function properly.

Search engine crawlers are unable to accept cookies and traditionally do not crawl using CSS and JavaScript. Flash, iframes, and most other technologies that enable engaging customer experiences are likewise either not crawlable or only minimally visible. Consequently, content and navigation that require these technologies to render will not be accessible to traditional search engine crawlers.

In organic search, no crawl means no indexation, no rankings, and no customers.

The solution is to develop the site using progressive enhancement, a method of development that starts with basic HTML presentation (text and links) and then layers on more advanced technologies for the customers whose browsers have the ability to support them. Progressive enhancement is good for accessibility as well as SEO, because the browsers used by some blind and disabled customers tend to have capabilities similar to those of search engine crawlers.

You may have noticed that I said “traditional search engine crawlers” several times. Some crawlers do possess more technical sophistication, while some are still essentially just text readers. For example, Google deploys some headless browsers, crawlers that are able to execute JavaScript and render CSS. These headless browsers test sites for forms of spam that attempt to take advantage of a traditional text crawler’s blindness to CSS and JavaScript. For example, the SEO spam tactic of using CSS to render white text on a white background to hide lists of keywords would be easy for a headless browser to sniff out, enabling the search engine to algorithmically penalize the offending page.

However, because search engines still use old-school text-based crawlers as well, don’t risk your SEO performance on the small chance that every search engine crawler that comes to your site is a headless browser. Make sure that your site is navigable and still contains the content it needs when you disable cookies, CSS, and JavaScript. To learn how, see “SEO: Try Surfing Like a Search Engine Spider,” a previous article. This is the best way to shed your marketer’s perception of your site and understand how search engines really see it.
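As a rough complement to browsing with cookies, CSS, and JavaScript disabled, the sketch below lists the links that a text-only reader could find in a page’s raw HTML. It is only an illustration built on Python’s standard `html.parser` module, not a model of how any search engine actually crawls. The `CrawlableLinkParser` class, the `crawlable_links` helper, and the sample markup are all hypothetical.

```python
from html.parser import HTMLParser


class CrawlableLinkParser(HTMLParser):
    """Collects href values from plain <a> tags found in raw HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip links that only do something when JavaScript runs.
        if href and not href.startswith(("javascript:", "#")):
            self.links.append(href)


def crawlable_links(raw_html):
    """Return the links visible without executing any scripts."""
    parser = CrawlableLinkParser()
    parser.feed(raw_html)
    return parser.links


# Hypothetical markup: one plain link, one script-only link, and one
# link that exists only after JavaScript writes it into the page.
SAMPLE = """
<a href="/toys">Toys</a>
<a href="javascript:showTab(2)">More products</a>
<script>document.write('<a href="/hidden">Hidden</a>');</script>
"""

print(crawlable_links(SAMPLE))
```

With this sample, only the plain `/toys` link is reported. The tab link and the script-written link are the kind of navigation that stays invisible to a text-based crawler.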

Developing a site that allows all search engines to crawl and index the content you want to rank is the best way to improve SEO performance.

SEO and Geolocation

Geolocation SEO issues can be the hardest to discover because you can’t disable geolocation with a browser plugin like you can JavaScript. Geolocation is applied to a user’s experience without notification, so it’s easy to forget that it’s there, making your experience of the site different from the crawlers’ experience.

The fact that you can’t see the difference doesn’t mean it’s not there. In the extreme cases I’ve worked with, all of the content for entire states or countries was inaccessible to search engines.

Geolocation can be problematic because Google crawls from an IP address in San Jose, CA, and Bing crawls from Washington state. As a result, they will always be served content from their respective cities. If bots are only allowed to receive content based on their IP addresses, they can’t crawl and index content for other locations. Consequently, other content from other locations won’t be returned in search results and can’t drive organic search traffic or sales.

Still, geolocation can be incredibly valuable to customer experience, and can be implemented in such a way that it doesn’t harm SEO. To ensure rankings for every location, sites must offer a manual override that allows customers and crawlers to choose any of the other available locations via plain HTML links. The customary “Change Location” or flag icon links that lead to a list of country or state options accomplish this goal well.

In addition, visitors should only be geolocated on their entry page. If geolocation occurs on every page, customers and crawlers will be relocated based on their IP-based location with every link they choose.
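One way to picture these two rules is as a small decision function. The sketch below assumes, purely for illustration, that each location’s content lives under its own URL prefix (such as `/uk/`), so that a plain HTML “Change Location” link is simply a link to that prefix. The `LOCATIONS` set and both function names are hypothetical, and a real site may carry the visitor’s choice some other way.

```python
from typing import Optional

# Hypothetical set of locations the site serves.
LOCATIONS = {"us", "ca", "uk"}


def location_from_path(path: str) -> Optional[str]:
    """Return the location named in the first path segment, if any."""
    first = path.strip("/").split("/")[0].lower()
    return first if first in LOCATIONS else None


def resolve_location(path: str, ip_location: str, default: str = "us") -> str:
    """Pick the location whose content should be served for a request."""
    chosen = location_from_path(path)
    if chosen:
        # An explicit location in the URL (the manual override) always wins.
        return chosen
    # Only requests with no location in the URL fall back to IP geolocation.
    return ip_location if ip_location in LOCATIONS else default


print(resolve_location("/uk/toys", ip_location="us"))
print(resolve_location("/toys", ip_location="ca"))
```

Under these assumptions, a crawler arriving from a US IP address that follows a link to `/uk/toys` is served the UK content instead of being relocated back to the US on every click.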

SEO and Your Platform

No one sets out to build an ecommerce site that can’t be crawled to drive organic search sales. The problem is that seemingly unrelated decisions made by smart people in seemingly unrelated meetings have a major impact on technical SEO.

The platform your site is built on has quirks and features and ways of organizing and displaying content that can improve or impair SEO. Right out of the box, even the most SEO-friendly platform imposes restrictions on how you can optimize your site.

For example, some platforms are great for SEO until you get to the filtering feature in the product catalog. Unfortunately, some really important product attributes that customers search for are hidden in those filters. Since the platform doesn’t allow filter pages to be optimized, those valuable filter pages won’t be able to win rankings for the phrases searchers use, and can’t drive organic search sales without some custom coding.

Unfortunately, each platform’s restrictions are different and very little detailed information exists on how to optimize around those restrictions.

To investigate your platform, try two things. First, read the article “SEO: Try Surfing Like a Search Engine Spider” and use the browser plugin recommended to browse around your site for 20 minutes. If there are areas you can’t access or times when you can’t tell immediately what the page is about, you probably have some SEO issues.

Next, analyze your web analytics organic search entry pages report and Google Webmaster Tools “Top Pages” report. Look for what’s missing from these reports: Are there page types or sections of the site that aren’t getting the organic search traffic they should?
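The “look for what’s missing” step can be roughed out in a few lines once you have two lists of URLs: the pages on the site and the pages that appear as organic search entry pages. The sketch below is a minimal illustration that treats the first URL path segment as a stand-in for page type. The function names and the `example.com` URLs are hypothetical, and in practice the lists would come from your own sitemap and analytics export.

```python
from urllib.parse import urlparse


def section_of(url: str) -> str:
    """Use the first path segment as a rough stand-in for page type."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "(home)"


def sections_missing_organic_entries(site_urls, entry_urls):
    """Return site sections that never appear as organic entry pages."""
    site_sections = {section_of(u) for u in site_urls}
    entry_sections = {section_of(u) for u in entry_urls}
    return sorted(site_sections - entry_sections)


# Hypothetical data standing in for a sitemap and an analytics export.
site_urls = [
    "https://example.com/",
    "https://example.com/category/toys",
    "https://example.com/product/123",
    "https://example.com/filter/color-red",
]
entry_urls = [
    "https://example.com/",
    "https://example.com/category/toys",
    "https://example.com/product/123",
]

print(sections_missing_organic_entries(site_urls, entry_urls))
```

Here the `filter` section never shows up as an entry page, which would be a prompt to check whether those pages can be crawled and optimized at all.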

Both of these investigative angles can reveal technical issues like the ones covered in this article. Huddle up with your developers to discuss and brainstorm solutions. Another option is to seek out the services of an SEO agency known for its experience with ecommerce optimization and platform implementations.

For the next installment of our “SEO 201” series, see “Part 4: Architecture Is Key.”