In “SEO 201, Part 1: Technical Rules,” my article last week, I posited three technical rules that are critical to tapping into Google’s ultimate power as an influencer of your customers. The first, and most important, rule is that crawlability determines findability.

Rule 1: Crawlable and Indexable

If you want your site, or a section of your site, to drive organic search visits and sales, the content must be crawlable and indexable.

Whether or not the search engines’ crawlers can access your site, then, is a gating factor to whether or not you can rank and drive traffic and sales via organic search. Search engines must be able to crawl a site’s HTML content and links to index it and analyze its contextual relevance and authority.

When crawlers cannot access a site, the content does not exist for all search intents and purposes. And because it does not exist, it cannot rank or drive traffic and sales.

It’s in our best interests, obviously, to ensure that our gates are open so that search engine crawlers can access and index the site, enabling rankings, traffic and sales.

Each of the following technical barriers slams the gate closed on search engine crawlers. They’re listed here in order of the amount of content they gate, from most pages impacted to least.

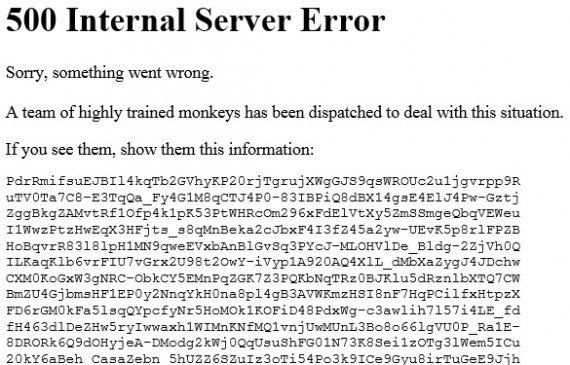

Site Errors

The biggest gating factor for crawlers is missing content and sites. If the site is down or the home page returns an error message, search engine crawlers will not be able to start their crawl. If this happens frequently enough, search engines will degrade the site’s rankings to protect their own searchers’ experience.

The most frequently seen errors bear a server header status of 404 (file not found) or 500 (internal server error). Any error in the 400 or 500 range will prevent search engines from crawling some portion or all of your site. The team that manages your server knows all about these errors and works to prevent them, but 400-range errors in particular tend to be page-specific and more difficult to root out. When you encounter 400- or 500-range error messages, send them to your technical team. Google Webmaster Tools provides a handy report that shows all of the errors its crawlers have encountered.
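As a rough illustration of that triage, the sketch below groups URLs by the status code they returned so that 400- and 500-range responses can be handed to the technical team. The function names and the sample URLs are hypothetical. In practice the status codes would come from your own crawl or from the Google Webmaster Tools error report.

```python
from collections import defaultdict


def error_class(status: int) -> str:
    """Label an HTTP status code by the range it falls in."""
    if 400 <= status <= 499:
        return "400-range"  # usually page-specific, e.g. 404 file not found
    if 500 <= status <= 599:
        return "500-range"  # server-side, e.g. 500 internal server error
    return "ok"  # anything else is not treated as an error in this sketch


def group_errors(statuses: dict[str, int]) -> dict[str, list[str]]:
    """Group URLs that returned 400- or 500-range codes by error class."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for url, status in statuses.items():
        label = error_class(status)
        if label != "ok":
            grouped[label].append(url)
    return dict(grouped)


# Hypothetical crawl results, for illustration only.
sample = {
    "http://www.example.com/": 200,
    "http://www.example.com/old-page": 404,
    "http://www.example.com/cart": 500,
}
report = group_errors(sample)
```

Splitting the two ranges apart reflects the point above: 500-range errors usually point at the server, while 400-range errors tend to need page-by-page follow-up.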

YouTube’s humorous 500 internal server error message.

Robots.txt Disallow

Robots.txt is a small text file that sits at the root of a site and asks crawlers either to access or to stay out of certain types of content. A disallow command in the robots.txt file tells search engines not to crawl the content specified in that command.

The file first specifies a user agent — which bot it’s talking to — and then specifies content to allow or disallow access to. Robots.txt files have no impact on customers once they’re on the site; they only stop search engines from crawling and ranking the specified content.

To understand how to use robots.txt, here’s an example. Say a site selling recycled totes — we’ll call it RecycledTotes.com — wants to keep crawlers from accessing individual coupons because they tend to expire before the bulk of searchers find them. When searchers land on expired coupons, they are understandably annoyed and either bounce out or complain to customer service. Either way, it’s a losing situation for RecycledTotes.com.

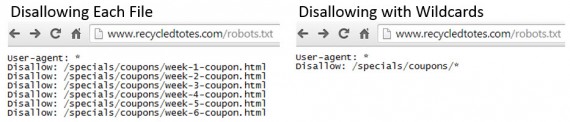

A robots.txt disallow can fix the problem. The robots.txt file will always be at the root, so in this case the URL would be www.recycledtotes.com/robots.txt. Adding a disallow for each coupon’s URI, or disallowing the directory that the individual coupons are hosted in using the asterisk as a wildcard, would solve the problem. The image below shows both options.

Example of a robots.txt file using disallow commands.
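For readers who want to try the two options in text form, here is a minimal sketch that uses Python’s standard `urllib.robotparser` module to check what a set of disallow rules would block. The coupon paths are hypothetical, since the real RecycledTotes.com paths are not given here. Python’s parser matches rules by path prefix, so the directory rule is written as a plain directory path rather than with an asterisk.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for RecycledTotes.com. The coupon paths are
# invented for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /coupons/
Disallow: /promo/spring-coupon.html
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules let the given crawler fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


BASE = "http://www.recycledtotes.com"

# A coupon inside the disallowed directory.
coupon_in_dir = can_crawl(ROBOTS_TXT, "Googlebot", BASE + "/coupons/10-off.html")
# A coupon disallowed by its own URI.
single_coupon = can_crawl(ROBOTS_TXT, "Googlebot", BASE + "/promo/spring-coupon.html")
# A product page that no rule mentions.
product_page = can_crawl(ROBOTS_TXT, "Googlebot", BASE + "/totes/canvas-tote.html")
```

Under these assumed rules, both coupon URLs are blocked and the product page remains crawlable.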

The robots.txt protocol is very useful and also very dangerous. It’s easy to disallow an entire site accidentally. Learn more about robots.txt at http://www.robotstxt.org and be certain to test every change to your robots.txt file in Google Webmaster Tools before it goes live.
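One way to catch the accidental site-wide disallow before a change goes live is a quick local check like the sketch below. It is an assumption-laden sanity check, not a substitute for testing in Google Webmaster Tools: the helper name `blocks_home_page` is hypothetical, and it relies on the prefix-matching behavior of Python’s standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def blocks_home_page(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules would keep the crawler off the home page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# Intended rule: keep crawlers out of a (hypothetical) coupons directory.
intended = "User-agent: *\nDisallow: /coupons/\n"

# Accidental rule: a bare slash matches every path on the site.
accidental = "User-agent: *\nDisallow: /\n"

intended_blocks_site = blocks_home_page(intended)
accidental_blocks_site = blocks_home_page(accidental)
```

The only difference between the two files is the path after the slash, which is why the mistake is so easy to make.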

Meta Robots NOINDEX

The robots metatag can be configured to prevent search engine crawlers from indexing individual pages of content by using the NOINDEX attribute. This is different from a robots.txt disallow, which prevents the crawler from crawling one or more pages. The meta robots tag with the NOINDEX attribute allows the crawler to crawl the page, but not to save or index the content on the page.

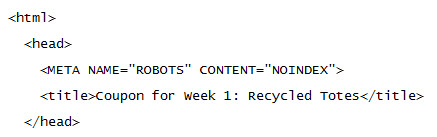

To use it, place the robots metatag in the head of the HTML page you don’t want indexed, such as the coupon pages on RecycledTotes.com, as shown below.

Example of a meta robots tag using the NOINDEX attribute.
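To make the tag concrete, the sketch below embeds a hypothetical coupon page in a string and uses Python’s standard `html.parser` module to check whether the page carries a meta robots NOINDEX. The page markup, the class name `MetaRobotsFinder`, and the helper `is_noindexed` are assumptions for illustration, not the actual RecycledTotes.com code.

```python
from html.parser import HTMLParser


class MetaRobotsFinder(HTMLParser):
    """Collect the directives from any <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "NOINDEX, FOLLOW".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindexed(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    finder = MetaRobotsFinder()
    finder.feed(html)
    return "noindex" in finder.directives


# Hypothetical coupon page; the robots tag sits next to the title and
# meta description in the head.
COUPON_PAGE = """\
<html>
<head>
<title>10% Off Coupon | RecycledTotes.com</title>
<meta name="description" content="Save 10% on recycled totes.">
<meta name="robots" content="NOINDEX">
</head>
<body><p>Coupon details</p></body>
</html>
"""

coupon_is_noindexed = is_noindexed(COUPON_PAGE)
```

A check like this could be run against a template’s rendered output to confirm that only the intended pages carry the tag.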

Most companies place the tag somewhere near the title tag and meta description to make it easier to spot the search-engine-related metadata. The tag is page-specific, so repeat it on every page you don’t want indexed. It can also be placed in the head of a template if you want to restrict indexation for every page that uses that template.

It’s generally difficult to accidentally apply the meta robots NOINDEX attribute across an entire site, so this tactic is generally safer than a disallow. However, it’s also more cumbersome to apply.

For those ecommerce sites on WordPress: It is actually very easy to accidentally NOINDEX your entire site. In WordPress’ Privacy Settings, there’s a single checkbox labeled “Ask search engines not to index this site” that will apply the meta robots NOINDEX attribute to every page of the site. Monitor this checkbox closely if you’re having SEO issues.

Like the disallow, the meta robots NOINDEX tag has no impact on your visitors’ experience once they’re on your site.

Other Technical Barriers

Some platform and development decisions can inadvertently erect crawling and indexation barriers. Some implementations of JavaScript, CSS, cookies, iframes, Flash, and other technologies can shut the gate on search engines. In other cases, these technologies can be more or less search friendly.

This bleeds over into the second rule of technical SEO: “Don’t trust what you can see as being crawlable.” Next week’s post will address some of the ins and outs of these technical barriers.

For the next installment of our “SEO 201” series, see “Part 3: Enabling Search-engine Crawlers.”